TL;DR – it’s not great

Search engines are handy. They’re good for finding things when you search for those things. They’re also really influential in our lives, in surprising ways.

In 2015, a study published in the hilariously-acronymed PNAS set out to determine whether voters’ opinions about political candidates could be manipulated by altering search engine results pages (SERPs) to favor specific candidates.

The results were fascinating (emphasis mine):

We predicted that the opinions and voting preferences of 2 or 3 per cent of the people in the two bias groups – the groups in which people were seeing rankings favouring one candidate – would shift toward that candidate. What we actually found was astonishing.

The proportion of people favouring the search engine’s top-ranked candidate increased by 48.4 per cent, and all five of our measures shifted toward that candidate. What’s more, 75 per cent of the people in the bias groups seemed to have been completely unaware that they were viewing biased search rankings. In the control group, opinions did not shift significantly.

This seemed to be a major discovery. The shift we had produced, which we called the Search Engine Manipulation Effect (or SEME, pronounced ‘seem’), appeared to be one of the largest behavioral effects ever discovered.

Over the next year or so, we replicated our findings three more times, and the third time was with a sample of more than 2,000 people from all 50 US states. In that experiment, the shift in voting preferences was 37.1 per cent and even higher in some demographic groups – as high as 80 per cent, in fact.

This is an important finding. It matters to conspiracy theorists and other tinfoil-hatters who think that Google cares enough to rig specific keyword results about candidates (for lots of different reasons, it doesn’t).

It’s also an important finding in the context of thinking about knowledge discovery in concept areas that are new, or not well defined yet.

Bots are a great example.

Right now, Google operates a bit like a rearview mirror – it’s surfacing results from old, authoritative content pieces that were written years ago.

As a result, the SERPs reflect the opinions and editorial slants of writers years ago, and these writers had a very definite point of view – namely, that bots were bad.

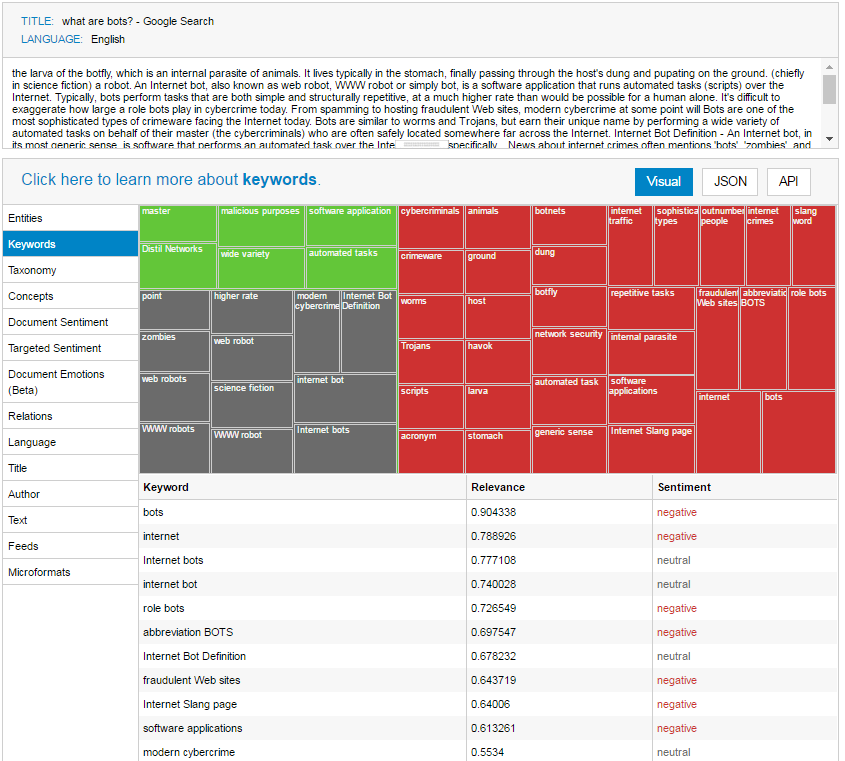

I Googled “what are bots?” and looked at the SERP. A quick read shows that 5 of the top 10 results are clearly negative. To dig a bit deeper, I fed the SERP into AlchemyAPI to get an analysis of specific keywords. Here it is:

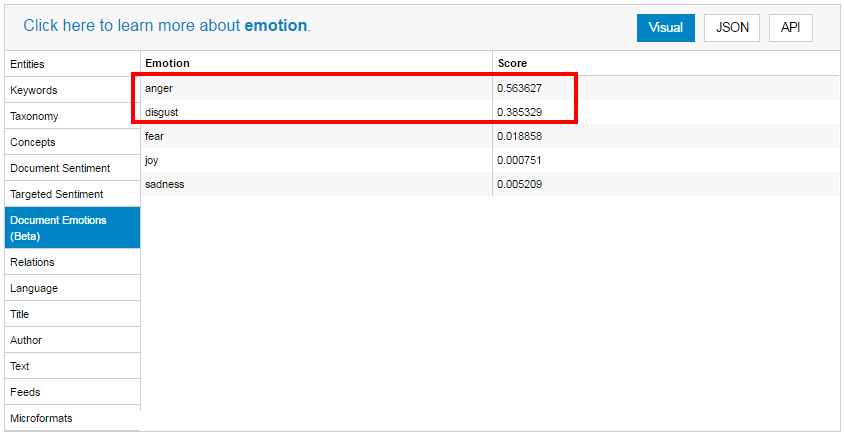

Just a quick look shows the red (negative) concepts vastly outnumber the green (good). If we get a bit more abstract and look at what emotions are being expressed on the page, it looks even worse:

Anger and disgust are the emotions most associated with “what are bots”.
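If you want to try a rough version of this tally yourself, here is a minimal Python sketch. To be clear, this is not AlchemyAPI and not what I ran above: it is a toy word-list approach, and the word lists, the snippets, and the function names `label_snippet` and `tally` are all made up for illustration.

```python
import re
from collections import Counter

# Hypothetical word lists, chosen only for illustration.
NEGATIVE = {"malicious", "spam", "attack", "fraud", "steal", "bad"}
POSITIVE = {"helpful", "useful", "automate", "assist", "good"}


def label_snippet(text):
    """Label a snippet by counting hits against the two word lists."""
    words = re.findall(r"[a-z]+", text.lower())
    neg = sum(w in NEGATIVE for w in words)
    pos = sum(w in POSITIVE for w in words)
    if neg > pos:
        return "negative"
    if pos > neg:
        return "positive"
    return "neutral"


def tally(snippets):
    """Count how many snippets fall under each label."""
    return Counter(label_snippet(s) for s in snippets)


# Made-up snippets standing in for SERP result descriptions.
snippets = [
    "Malicious bots steal content and spam websites.",
    "Bots can automate helpful tasks.",
    "A bot is a software application that runs tasks.",
]
counts = tally(snippets)
print(counts["negative"], counts["positive"], counts["neutral"])
```

A real sentiment service does far more than count words, so treat this only as a way to see the shape of the exercise: label each result, then compare the totals.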

This is a problem for people who operate in the world of bots (like us). Basically, Google is showing the world a very one-sided view of what bots are, and what bots “mean” to the world at large, and it’s a bad-looking view. Google isn’t “doing” this in the sense of actively shaping the SERPs to be negative. It’s just giving that rearview mirror perspective of how people have historically talked about bots. And the discussion hasn’t been positive.

Given how much SERPs affect the public’s opinion, all of us in the industry need to talk more, demonstrate more, and publish more, showcasing all the good things that bots can accomplish. Only when the momentum of the conversation and published content shifts toward the positive will the SERPs start to shift, and public opinion along with it.
