Ethical Considerations

Investigating the surprisingly deep implications of bot behavior

How often do you think about the ethical ramifications of technology? If you’re like me, the answer is “almost never”. Technology arrives, and I decide whether or not to use it based on how I feel about it: a gut reaction. Only later do I construct rational arguments about why my decision was the right one.

“Decide emotionally first, rationalize later” is a very common approach to human decision-making, but it may be uniquely dangerous when applied to machine intelligence.

In this article, we’ll discuss the ethics of machine intelligence, because it’s important. We’re going to be interacting with these systems a lot in the very near future, so we should make conscious choices about how we build and use them.

Bot ethics

Let’s just get this part out of the way:

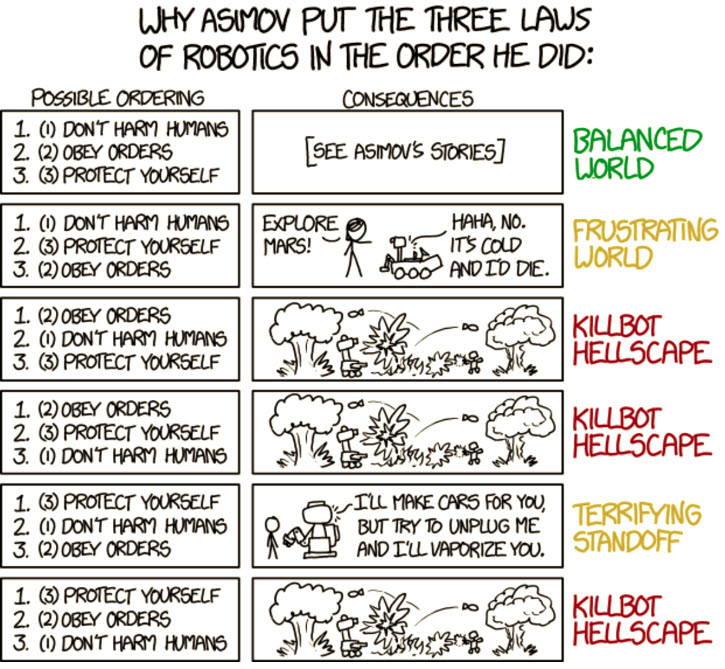

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.

- A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.

- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

Those, of course, are Asimov’s Three Laws of Robotics. Asimov first used them in a short story in 1942, and they’ve been a cultural reference point for governing the behavior of robots (i.e. self-directed computers) ever since.

Thinking about bots in an ethical context might not feel natural – it didn’t to me, initially. I was just thinking of bots as software that interacts with other software. So long as I’m not programming my software to interact maliciously with your software, we’re cool, right?

Wrong, of course. The interactions between bots, humans, and back-end systems can be very complex, and can expose all kinds of opportunities for bad behavior.

Amir Shevat, lead for developer relations at Slack, has proposed slightly modifying the 3 Laws to make them more bot-specific:

1. Bots may not harm humans. This includes anything unlawful or illegal, spam, information theft, and fraud.

2. Bots will obey humans. Bots should follow human instructions to the best of their ability, as long as doing so does not conflict with the first rule.

Amir later went on to discuss some of the questions around bot ethics, which I’ll paraphrase:

Ownership

- Bots should clearly state which party (bot developer or user) owns data shared with the bot.

Privacy

- Users should be able to find out if their personal information is being shared in any way by the bot or bot developer.

Use of data for advertising

- A bot should not serve advertising “unless it has a strong, express purpose that benefits the user”.

- I think that this is a noble (but laughable) goal. Bots will gather data, and it will be passed back-and-forth with advertisers.

- For example, Facebook Messenger has already experimented with using bots to get users to interact with sponsored content.

- Similarly, Facebook Messenger offers ad formats for marketing bots. We should expect this to be a two-way street: people will use advertising to publicize their bots, and bots will advertise to people.

Abuse

- Developers should have a polite canned response for when users yell or curse at the bot.

- Because bots should not harm humans, they should not retaliate when humans are abusive.
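Handling abuse with a polite canned reply is simple enough to sketch. This is a minimal illustration, assuming a small keyword list for cursing and an all-caps heuristic for yelling; `ABUSIVE_TERMS`, `CANNED_REPLY`, `is_abusive`, `is_yelling`, and `respond` are hypothetical names, not any bot platform’s API. Keyword matching is easy to evade and prone to false positives, so treat this as an outline of the control flow rather than a moderation system.

```python
import re
from typing import Callable

# Hypothetical, deliberately tiny list of abusive terms. Keyword matching is
# only a placeholder for whatever classifier a real bot would use.
ABUSIVE_TERMS = {"idiot", "stupid", "useless"}

# One polite canned reply: it acknowledges the user without insulting,
# arguing with, or retaliating against them.
CANNED_REPLY = "Sorry, I can't help with that. Type 'help' to see what I can do."


def is_abusive(message: str) -> bool:
    """True if any word in the message appears in ABUSIVE_TERMS."""
    words = re.findall(r"[a-z']+", message.lower())
    return any(word in ABUSIVE_TERMS for word in words)


def is_yelling(message: str, min_letters: int = 5) -> bool:
    """Heuristic for 'yelling': at least min_letters letters, all uppercase."""
    letters = [c for c in message if c.isalpha()]
    return len(letters) >= min_letters and all(c.isupper() for c in letters)


def respond(message: str, normal_handler: Callable[[str], str]) -> str:
    """Send abusive or shouted messages to the canned reply; pass the rest through."""
    if is_abusive(message) or is_yelling(message):
        return CANNED_REPLY
    return normal_handler(message)
```

For example, `respond("you are useless", handler)` and `respond("WHY WON'T YOU WORK", handler)` both return `CANNED_REPLY`, while an ordinary question is passed to `handler` unchanged. The bot never escalates; the worst a user gets back is the same neutral sentence.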

Bots impersonating humans

- Bots should not impersonate humans, nor should humans impersonate bots.

All of these are perfectly nice ideas. However, I don’t believe we should expect any web-scale software to have our best interests at heart; that expectation is simply impractical. Individual actors and businesses will build bots, and some of those bots will be bad.

The problem with ethical guidelines is enforceability: if someone builds a bad bot, who is in a position to take meaningful corrective action? I think the answer is the bot platforms themselves.

Bot platforms are in a uniquely powerful position, as they control access. If a bot violates any ethical guidelines, there should be a clear path for users to report the violation and, if necessary, block the bot.

The platform can monitor these reports and blocks and, if bad behavior persists, take corrective action against the bot developer. Too many violations or too many blocks by users, and the bot gets shut down. If your bots get shut down too many times, you can’t develop or publish bots on that platform.

Easy to say, but probably very difficult to build in a way that isn’t immediately open to abuse by exactly the kind of unethical operators you would want to ban…
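The escalation path described above (user reports and blocks, then bot shutdown, then a developer ban) can be sketched as a small piece of platform-side bookkeeping. This is a minimal sketch under stated assumptions: the `Platform` class, its methods, and the threshold values are hypothetical, since the article doesn’t say what counts as “too many”, and no real bot platform’s API is implied. Counting each user at most once per bot stops a single user from spamming reports, but it does nothing against coordinated brigading or sock-puppet accounts, which is exactly the kind of abuse the previous paragraph warns about.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass
class Platform:
    """Toy model of platform-side enforcement for bots (all names hypothetical)."""

    max_reports: int = 50    # distinct users reporting violations before shutdown
    max_blocks: int = 100    # distinct users blocking the bot before shutdown
    max_shutdowns: int = 3   # shut-down bots before the developer is banned
    bot_owner: dict = field(default_factory=dict)  # bot -> developer
    reports: defaultdict = field(default_factory=lambda: defaultdict(set))  # bot -> reporters
    blocks: defaultdict = field(default_factory=lambda: defaultdict(set))   # bot -> blockers
    shut_down: set = field(default_factory=set)
    shutdowns_by_dev: defaultdict = field(default_factory=lambda: defaultdict(int))
    banned_devs: set = field(default_factory=set)

    def publish(self, dev: str, bot: str) -> bool:
        """Register a bot; banned developers are refused."""
        if dev in self.banned_devs:
            return False
        self.bot_owner[bot] = dev
        return True

    def report(self, user: str, bot: str) -> None:
        """A user reports that the bot violated a guideline."""
        if bot in self.bot_owner:
            self.reports[bot].add(user)  # repeat reports from one user count once
            self._review(bot)

    def block(self, user: str, bot: str) -> None:
        """A user blocks the bot."""
        if bot in self.bot_owner:
            self.blocks[bot].add(user)  # repeat blocks from one user count once
            self._review(bot)

    def _review(self, bot: str) -> None:
        """Shut the bot down once either threshold is hit; ban repeat offenders."""
        if bot in self.shut_down:
            return
        if len(self.reports[bot]) >= self.max_reports or len(self.blocks[bot]) >= self.max_blocks:
            self.shut_down.add(bot)
            dev = self.bot_owner[bot]
            self.shutdowns_by_dev[dev] += 1
            if self.shutdowns_by_dev[dev] >= self.max_shutdowns:
                self.banned_devs.add(dev)
```

With tiny thresholds (`Platform(max_reports=2, max_blocks=2, max_shutdowns=1)`), two distinct users reporting the same bot shut it down, and because one shutdown is the limit, its developer can no longer publish. The hard part the article points to lives outside this sketch: deciding which reports and blocks are genuine.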

Ethics for Artificial Intelligence

Bots represent a leading edge of human and AI interaction. As a result, any discussion around ethics and bots will have at least some overlap with larger discussions around what the ethics of artificial intelligence should be, as some (but not all) bots use AI, and we should expect that proportion to grow.

There’s been a lot of activity around ethics in relation to AI, with some calling it “a kind of ethics fever”. Early in 2017, many of the biggest names in AI research came together for the Asilomar Beneficial AI Conference. Attendees included notables like Elon Musk from Tesla, Demis Hassabis from DeepMind, Yann LeCun from Facebook, and Ray Kurzweil from Google. The conference also attracted big names from outside technology, like Nick Bostrom from Oxford and Sam Harris, as well as a murderers’ row of notable AI researchers.

What struck me was the purpose of this conference: “in which people from various AI-related fields hashed out opportunities and challenges related to the future of AI and steps we can take to ensure that the technology is beneficial.” That so many people at the forefront of AI research and development got together specifically to talk about the future of AI simply underlines the stakes of what’s being discussed. AI has the potential to be an epoch-defining technology, and we can’t just think about it in terms of the logical boundaries of what it can and can’t do. We must consider the ethical ramifications of what AI might mean to the human race.

The most interesting output was the Asilomar AI Principles document, which outlines 23 principles to guide the development of AI in the future.

Artificial intelligence has already provided beneficial tools that are used every day by people around the world. Its continued development, guided by the following principles, will offer amazing opportunities to help and empower people in the decades and centuries ahead.

Research Issues

1) Research Goal: The goal of AI research should be to create not undirected intelligence, but beneficial intelligence.

2) Research Funding: Investments in AI should be accompanied by funding for research on ensuring its beneficial use, including thorny questions in computer science, economics, law, ethics, and social studies, such as:

- How can we make future AI systems highly robust, so that they do what we want without malfunctioning or getting hacked?

- How can we grow our prosperity through automation while maintaining people’s resources and purpose?

- How can we update our legal systems to be more fair and efficient, to keep pace with AI, and to manage the risks associated with AI?

- What set of values should AI be aligned with, and what legal and ethical status should it have?

3) Science-Policy Link: There should be constructive and healthy exchange between AI researchers and policy-makers.

4) Research Culture: A culture of cooperation, trust, and transparency should be fostered among researchers and developers of AI.

5) Race Avoidance: Teams developing AI systems should actively cooperate to avoid corner-cutting on safety standards.

Ethics and Values

6) Safety: AI systems should be safe and secure throughout their operational lifetime, and verifiably so where applicable and feasible.

7) Failure Transparency: If an AI system causes harm, it should be possible to ascertain why.

8) Judicial Transparency: Any involvement by an autonomous system in judicial decision-making should provide a satisfactory explanation auditable by a competent human authority.

9) Responsibility: Designers and builders of advanced AI systems are stakeholders in the moral implications of their use, misuse, and actions, with a responsibility and opportunity to shape those implications.

10) Value Alignment: Highly autonomous AI systems should be designed so that their goals and behaviors can be assured to align with human values throughout their operation.

11) Human Values: AI systems should be designed and operated so as to be compatible with ideals of human dignity, rights, freedoms, and cultural diversity.

12) Personal Privacy: People should have the right to access, manage and control the data they generate, given AI systems’ power to analyze and utilize that data.

13) Liberty and Privacy: The application of AI to personal data must not unreasonably curtail people’s real or perceived liberty.

14) Shared Benefit: AI technologies should benefit and empower as many people as possible.

15) Shared Prosperity: The economic prosperity created by AI should be shared broadly, to benefit all of humanity.

16) Human Control: Humans should choose how and whether to delegate decisions to AI systems, to accomplish human-chosen objectives.

17) Non-subversion: The power conferred by control of highly advanced AI systems should respect and improve, rather than subvert, the social and civic processes on which the health of society depends.

18) AI Arms Race: An arms race in lethal autonomous weapons should be avoided.

Longer-term Issues

19) Capability Caution: There being no consensus, we should avoid strong assumptions regarding upper limits on future AI capabilities.

20) Importance: Advanced AI could represent a profound change in the history of life on Earth, and should be planned for and managed with commensurate care and resources.

21) Risks: Risks posed by AI systems, especially catastrophic or existential risks, must be subject to planning and mitigation efforts commensurate with their expected impact.

22) Recursive Self-Improvement: AI systems designed to recursively self-improve or self-replicate in a manner that could lead to rapidly increasing quality or quantity must be subject to strict safety and control measures.

23) Common Good: Superintelligence should only be developed in the service of widely shared ethical ideals, and for the benefit of all humanity rather than one state or organization.

Other groups have organized around the same questions. The stated objective of the Partnership on AI is to address opportunities and challenges with AI technologies to benefit people and society. Together, the organization’s members will conduct research, recommend best practices, and publish research under an open license in areas such as ethics, fairness, and inclusivity; transparency, privacy, and interoperability; collaboration between people and AI systems; and the trustworthiness, reliability, and robustness of the technology. It does not intend to lobby government or other policymaking bodies.

Recognizing the vast potential of artificial intelligence to affect the public interest, the John S. and James L. Knight Foundation, Omidyar Network, LinkedIn founder Reid Hoffman, and others have formed a $27 million fund to apply the humanities, the social sciences and other disciplines to the development of AI.

Resources for AI Educators

Finally, educators in the field of artificial intelligence are grappling with how they should create or modify course curricula to give students an understanding of the ethical issues that AI raises.

In 2014, Stanford commissioned its 100 Year Study on Artificial Intelligence (AI100).

The overarching purpose of the One Hundred Year Study’s periodic expert review is to provide a collected and connected set of reflections about AI and its influences as the field advances. The studies are expected to develop syntheses and assessments that provide expert-informed guidance for directions in AI research, development, and systems design, as well as programs and policies to help ensure that these systems broadly benefit individuals and society.

The AI100 recently released its 2016 report, which is extremely detailed and provides excellent background on how AI is likely to affect fields ranging from transportation to elder care to employment.

Another recent paper, and some of the most complete thinking I’ve come across, is “Ethical Considerations in AI Courses”, written by researchers from USC, the University of Kentucky, and IBM Watson.

Engineering education often includes units, and even entire courses, on professional ethics, which is the ethics that human practitioners should follow when acting within their profession.

Advances in AI have made it necessary to expand the scope of how we think about ethics; the basic questions of ethics — which have, in the past, been asked only about humans and human behaviors — will need to be asked about human-designed artifacts, because these artifacts are (or will soon be) capable of making their own action decisions based on their own perceptions of the complex world.

How should self-driving cars be programmed to choose a course of action, in situations in which harm is likely either to passengers or to others outside the car? This kind of conundrum — in which there is no “right” solution, and different kinds of harm need to be weighed against each other — raises practical questions of how to program a system to engage in ethical reasoning.

It also raises fundamental questions about what kinds of values to assign to help a particular machine best accomplish its particular purpose, and the costs and benefits that come with choosing one set of values over another.

The paper covers the following topics:

2.1 How Should AIs Behave In Our Society?

2.2 What Should We Do If Jobs Are In Short Supply?

2.3 Should AI Systems Be Allowed to Kill?

2.4 Should We Worry About Superintelligence and the Singularity?

2.5 How Should We Treat AIs?

2.6 Tools for Thinking About Ethics and AI

3.1 Deontology

3.2 Utilitarianism

3.3 Virtue Ethics

3.4 Ethical Theory in the Classroom: Making the Most of Multiple Perspectives

4.1 Case Study: Elder Care Robot

4.2 Case Study 2: SkyNet

4.3 Case Study 3: Bias in Machine Learning

5.0 Teaching Ethics in AI Classes

5.1 Ethical Issues

5.2 Case Studies

5.3 Teaching Resources

The paper has copious references, notes and links to external resources. It’s a fantastic starting point for educators.

Plus, any time you have a section called “How Does Ethical Theory Help Us Interpret Terminator 2?”, you’ve probably got some content that’s going to get your class’s undivided attention 😉